Touchless interface as a new age UX approach or a communication method for a SaaS front end

This article covers some approaches and development examples for building a touchless user interface in SaaS development, and shows how and where it can be applied.

What is a touchless interface?

The meaning of a touchless user interface is quite simple, and you most likely encounter it on your own devices from time to time. Touchless UI for SaaS is usually used for biometrics, avatars, animojis, social media, or security. Touchless UI solutions have always aimed at a convenient, user-friendly interface. As developers of IT products, we always strive to save people time and make their work easy, understandable, and fast. Today it is finally possible to build a SaaS touchless UI solution that does not cost a lot of money and can be implemented in a fairly short time. It used to be extremely difficult and expensive; it is much cheaper now, mainly thanks to open-source development.

The touchless interface is also good for hygiene and is especially convenient in high-traffic places with large crowds, such as big cities, because people do not have to touch the same surfaces.

Touchless UI use cases for SaaS

There are four fundamentally different applications:

- Retail businesses, shops, or any other location where you need to serve a client, advise them, and eventually make a sale.

- Information projects. For example, kiosks at an airport or elsewhere that let a person get the information they need about the city, tickets, or flights. Alternatively, these can be educational projects in museums or educational institutions, where a person approaches the device, makes an inquiry, and receives information.

- Contactless control systems for any device. Most often these are IoT projects, where a person interacts with a device and controls it without pressing buttons (i.e. “touchless”).

- Simulation training systems. This is a separate, very specific category where the task is to simulate a situation and teach a person how to act in it.

Over the past 3 years, we have gained experience in developing systems in all four categories, and in this article I will give an overview of the solutions that can be used for each type of system.

Features of touchless UI

Most of these features are well known, and we already use them daily in our phones, intercoms, and other devices. But let's take a closer look.

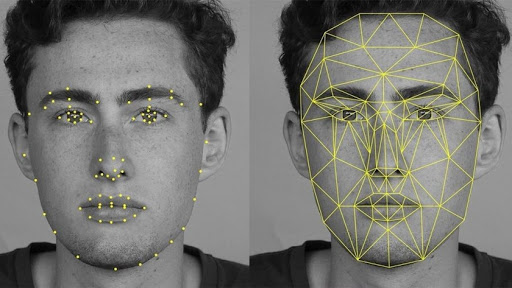

Face Detection

One of the simplest and most common features is face detection. Detection is fundamentally different from recognition in that it only establishes that a person is present. We can register that a person is next to the device, but we do not establish their identity from their face.

Naturally, this requires an always-on camera that continuously supplies a video stream, while the software continuously checks whether there is a person in the frame. Face detection does not need a particularly good camera. A low or medium resolution is enough, and the picture quality itself matters little, since face detection works in black and white as well. To get usable pictures in the dark, use infrared illumination. This should be built into the camera itself.

Detecting intention

It is also good practice to track a person's intentions. You should not react instantly when a person appears in the frame, because they may just be passing by with no wish to use the device. In one of our projects, we solved this very simply with a 2-second delay: if the person stays in the frame for more than 2 seconds after being detected, we assume that they have stopped and that the interaction can begin. In this project, incidentally, a video call to a call center was placed after the delay, and we used Twilio for that.
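The 2-second rule described above can be sketched roughly as follows. This is only an illustration, not the code from the project: the `IntentDetector` class and its `update` method are hypothetical names, and the sketch assumes that some face detector already reports, for every frame, whether a face is present.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class IntentDetector:
    """Signals intent once a face has stayed in frame longer than `dwell_seconds`."""

    dwell_seconds: float = 2.0  # the delay mentioned in the article
    _first_seen: Optional[float] = field(default=None, init=False)

    def update(self, face_present: bool, now: float) -> bool:
        """Feed one detection result with its timestamp (in seconds)."""
        if not face_present:
            # The person left the frame: forget the previous sighting.
            self._first_seen = None
            return False
        if self._first_seen is None:
            # First frame in which the face is visible.
            self._first_seen = now
        # Intent is assumed only after the face has stayed long enough.
        return now - self._first_seen > self.dwell_seconds


detector = IntentDetector()
for timestamp, present in [(0.0, True), (1.0, True), (2.5, True)]:
    if detector.update(present, timestamp):
        print(f"start interaction at t={timestamp}")
```

Resetting the timer whenever the face disappears means a passer-by who only crosses the frame never triggers the interaction.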

Line of sight, emotion, age, and gender recognition

You can go further and respond to other facial characteristics such as age and gender. There are already open-source projects that determine gender from a face very accurately and estimate the age range fairly well. Determining the exact age is not possible today. We are currently working on a retail project for stores where age and gender are used to personalize content.

Another option is the direction of the person's gaze and their emotions. In the open-source world, there are already models that recognize human emotions and can quite easily be used for various triggers. For example, depending on a person's emotions, you can offer different content, show or not show advertising, suggest different products, assess the quality of service, and so on.

At the moment, we are working on a project that determines the gaze direction of a person standing in front of a large monitor. We do this without VR technology or Microsoft Kinect, i.e. with a video camera only. With this development, you can attach the mouse cursor to the gaze direction: the person moves the cursor on the screen with their eyes and, to click, holds their gaze still for a moment. This approach has long been used in virtual reality systems, but it is much more difficult to implement with only a video camera and no additional devices.
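The "hold your gaze to click" idea can be illustrated with a small sketch. It assumes that a gaze estimator (not shown here) already converts the camera image into screen coordinates; the `DwellClicker` class, the 40-pixel radius, and the 1-second dwell time are hypothetical values chosen for illustration only.

```python
import math
from typing import Optional, Tuple


class DwellClicker:
    """Emits one click when the gaze stays within a small radius long enough."""

    def __init__(self, radius_px: float = 40.0, dwell_seconds: float = 1.0):
        self.radius_px = radius_px
        self.dwell_seconds = dwell_seconds
        self._anchor: Optional[Tuple[float, float, float]] = None  # (x, y, t)
        self._clicked = False

    def update(self, x: float, y: float, t: float) -> Optional[Tuple[float, float]]:
        """Feed one gaze sample; returns the click position or None."""
        moved_away = (
            self._anchor is None
            or math.hypot(x - self._anchor[0], y - self._anchor[1]) > self.radius_px
        )
        if moved_away:
            # The gaze moved to a new spot: start a new fixation there.
            self._anchor = (x, y, t)
            self._clicked = False
            return None
        if not self._clicked and t - self._anchor[2] >= self.dwell_seconds:
            # Fire once per fixation so a long stare does not repeat the click.
            self._clicked = True
            return (self._anchor[0], self._anchor[1])
        return None
```

In practice the radius has to absorb the jitter of the gaze estimate, which is typically much larger for a plain camera than for dedicated eye-tracking hardware.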

Face recognition

If just detecting a face is not enough and you need to establish a person's identity, for example to determine exactly which user approached the device, then full recognition is needed. This task is much more complex and requires a server: doing it in the application is unrealistic because a large database of people has to be kept, and a local machine or mobile device will not cope with such a load.

Voice Recognition

Voice recognition is a bit more complicated, but many projects find it very attractive because it speeds up and simplifies interaction with the software. The first question is whether it can be implemented in the application itself (in our case, in JavaScript) or whether you need a server that processes the voice and returns a response. Two options are possible here:

- If you need a fixed set of standard commands, this is generally easy and an application is enough (a server is not needed). Voice control with a relatively small set of project-specific commands can also be solved easily without a server, but the neural network will have to be trained specifically for the commands needed in the project.

- If you need a large number of specific words (for example, street names) or support for several languages, you will have to create your own server that will process the voice and pay extra for a neural network. This is likely to be a big, complicated, and expensive project. Therefore, it is more logical to use existing services such as Google or Azure. Of course, they cost money, but for a startup or a project at an early stage of development this is much less risky.
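For the first option, the last step (turning recognized speech into one of a few fixed commands) can be very small. The sketch below assumes that a speech-to-text component, not shown, has already produced a text transcript. The command phrases, the action names, and the `match_command` helper are hypothetical, and fuzzy matching with Python's standard `difflib` stands in for whatever matching a real project would use.

```python
import difflib
from typing import Optional

# Hypothetical fixed command set: spoken phrase -> action identifier.
COMMANDS = {
    "start call": "START_CALL",
    "end call": "END_CALL",
    "show menu": "SHOW_MENU",
    "go back": "GO_BACK",
}


def match_command(transcript: str, cutoff: float = 0.75) -> Optional[str]:
    """Map a (possibly slightly misrecognized) transcript to a known command."""
    # Normalize case and whitespace before comparing.
    phrase = " ".join(transcript.lower().split())
    best = difflib.get_close_matches(phrase, list(COMMANDS), n=1, cutoff=cutoff)
    return COMMANDS[best[0]] if best else None


print(match_command("Start cal"))            # close enough to "start call"
print(match_command("what is the weather"))  # no known command matches
```

The `cutoff` controls how tolerant the matching is: a lower value accepts more recognition errors but increases the risk of triggering the wrong command.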

Palm and finger gestures as a way to operate interfaces

In some cases, it may be convenient to control the program using hand gestures. Solutions that do this well enough already exist: the model is trained and the recognition accuracy is high. Our favorite technology stack for implementing this is Python with Keras + TensorFlow / Theano + OpenCV.

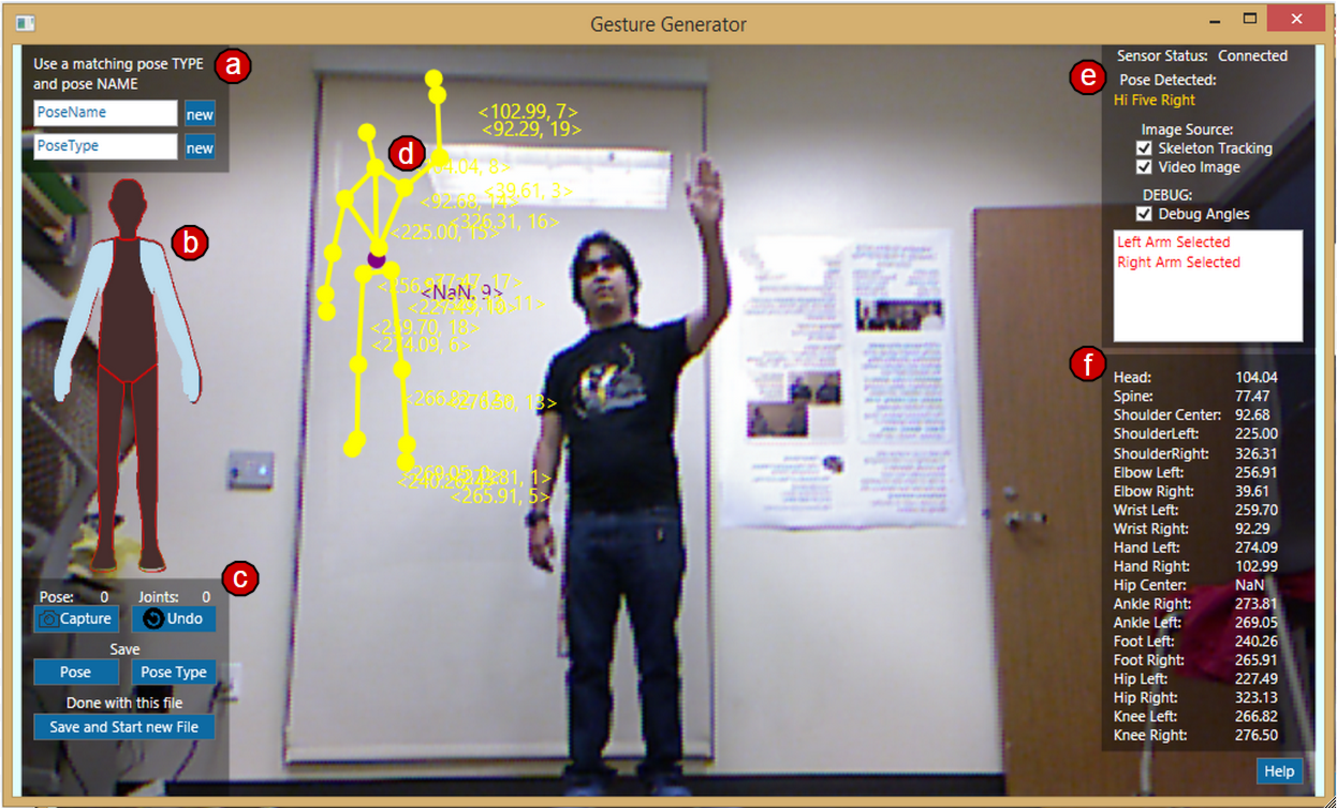

Body gesture recognition

A more exotic option is to control the product with whole-body gestures, such as arm or leg movements, or with the postures a person takes. This is unlikely to be useful for a classic program, but it can be widely used in simulation systems. We had a project where we had to track a referee's gestures for training soccer referees. In this project we used virtual reality with HTC trackers, which let us determine both the person's running speed and all of the referee's gestures. For simple gestures, elementary geometry is sufficient. But if you need to distinguish a wide variety of complex poses and gestures, you will have to develop an AI model and then train it.
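As an illustration of the "elementary geometry" case, the sketch below checks whether an arm is raised and straight from three 2D points. It assumes that the shoulder, elbow, and wrist positions are already available in image coordinates (from trackers or a pose estimator, not shown). The function names and the 160-degree threshold are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle ABC in degrees, measured at the middle point b."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("points must be distinct")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point rounding.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def is_arm_raised_straight(
    shoulder: Point, elbow: Point, wrist: Point, min_elbow_angle: float = 160.0
) -> bool:
    """True if the wrist is above the elbow, the elbow above the shoulder,
    and the arm is almost straight."""
    # Image coordinates: y grows downward, so "above" means a smaller y.
    above = wrist[1] < elbow[1] < shoulder[1]
    return above and joint_angle(shoulder, elbow, wrist) >= min_elbow_angle


print(is_arm_raised_straight((100, 200), (100, 150), (100, 100)))
```

A handful of such rules can cover a small gesture vocabulary; once the poses become numerous or ambiguous, a trained model is the more practical route.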

Custom hardware

In 99% of such projects, a video camera is used, and it must be integrated into the device. The video stream needs to be processed correctly: either sent to the server in full, processed on the device itself, or sent as individual frames only. For this, you often have to deal with the hardware. We work with a company that has extensive experience in developing electronics of this kind and specializes in processing video data. Therefore, if your task requires both software and hardware, we can handle it: create a device from scratch or modify an existing one, and develop all the necessary software.

Technologies we use for touchless user interface development

In most cases, all this runs on a tablet or phone with either iOS or Android. But it can also be any other device, most likely running Android, Windows, or a Unix operating system. In our projects, we have worked with iOS devices, Android smartphones and tablets, and fully custom Unix devices.

React Native

To develop applications for all of these operating systems, we use React Native because, firstly, it is JavaScript, which many people know, and secondly, you can compile the project for Android and iOS, and as a standalone application for Windows or Unix. We believe that today this is the best solution because it lets you cover all operating systems with one code base; it is also very common, so developers are much easier to find, and there is a huge number of components and libraries for it. For React Native, there is already an open-source solution for detecting a person in the frame. This is a simple task that does not require many resources, so no server is needed and everything can run in the application itself.

Twilio

We really love this service, and after using it in several projects we have learned how to work with it effectively. When we develop a SaaS application that requires video calls, we definitely choose Twilio. For service tasks, a touchless interface combined with Twilio video calls lets you quickly and fairly cheaply develop an application that connects a user with any kind of agent or consultant. If you add voice recognition with voice bots, you can fully automate consulting and communication. Incidentally, Twilio already offers the ability to create AI bots that automate customer service.

Our Experience

We had a project in which we developed a server that accepts photos of people from a device; our task was to recognize people from an existing database and also to add to it. The device was used in various companies, schools, and other institutions with a limited number of users, all of whom were entered into the database along with their photos. Our server received the image from the device's camera, calculated its features, and searched the database for the most similar face. This allowed us to determine with 70-80% probability which user had approached the device. To achieve this accuracy, we relied on the Dlib library. For production, such accuracy is not enough, so to increase it we collected photos from our users, saved their features in the database, and used them to make our solution “smarter”. The project, Soapy, is an excellent device, and we hope that great success awaits them!
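The "calculate features, then find the most similar face" step can be sketched as a nearest-neighbor search over feature vectors. This is not the code from the project: it assumes that each face has already been converted into a numeric feature vector (for example by Dlib, not shown). The `find_best_match` helper, the short three-number vectors, and the 0.6 distance threshold are illustrative assumptions.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def find_best_match(
    query: Sequence[float],
    database: Dict[str, Sequence[float]],
    max_distance: float = 0.6,  # assumed threshold; tune on real data
) -> Optional[Tuple[str, float]]:
    """Return (name, distance) of the closest stored face, or None if nothing is close enough."""
    best: Optional[Tuple[str, float]] = None
    for name, features in database.items():
        if len(features) != len(query):
            raise ValueError(f"feature length mismatch for {name!r}")
        distance = math.dist(query, features)  # Euclidean distance
        if best is None or distance < best[1]:
            best = (name, distance)
    if best is None or best[1] > max_distance:
        return None  # unknown person: a candidate for adding to the database
    return best


# Toy vectors for illustration; real face features are much longer.
known_faces = {"alice": [0.1, 0.2, 0.3], "bob": [0.9, 0.8, 0.7]}
print(find_best_match([0.12, 0.21, 0.29], known_faces))
```

A linear scan like this is adequate for a limited number of users, such as one school or company. Storing several feature vectors per person, as described above, is one straightforward way to make the matching more robust.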

In another project, we developed a voice control system and trained it on several key phrases with a sample of only 10 people. The training was conducted in ideal conditions, and in production it gave a recognition accuracy of 40-60%. Of course, in reality, many more different people are needed for quality training. But we were very pleased. The system recognized our specific commands even though it worked without a server; everything was done in React Native. The main conclusion I want to draw is that today voice control is much cheaper than many people think.

Final Thoughts

As a team that develops a variety of software, we simply cannot afford not to master the technology of touchless interaction with devices. This can begin with motion recognition and end with understanding voice commands and retina recognition.

One way or another, in the near future it will be present even in the simplest projects. Our dedicated development team has already begun to master this field and is making progress. So if this becomes part of your next project, we are ready for it. Tell our manager what kind of touchless interface technology for SaaS you need!